Understanding Artificial Neural Networks: A Comprehensive Overview


Chapter 1: Introduction to Neural Networks

Artificial neural networks (ANNs) are a family of machine learning models inspired by the structure and function of the human brain. Today, AI powered by neural networks is ubiquitous, playing a vital role in applications such as language translation, facial recognition, text summarization, autonomous vehicles, and speech recognition. It's hard to imagine modern products such as the Apple Pencil, or voice assistants like Siri and Alexa, working as they do without neural networks.

To effectively engage with this technology, it’s crucial to familiarize ourselves with some foundational terms and concepts. Although neural networks may seem intricate at first glance, they can be understood with a basic overview of how they operate.

What is a Neural Network?

In Deep Learning with Python, François Chollet describes artificial intelligence as the effort to automate intellectual tasks normally performed by humans. ANNs are among the main engines of this effort: models loosely inspired by the brain that handle tasks where hand-written, rule-based algorithms fall short. Although ANNs have become a hot topic only in recent years, the idea is much older: the perceptron, one of the earliest neural network models, was introduced by Frank Rosenblatt in the late 1950s.

Understanding Perceptrons

A perceptron is the simplest form of neural network, consisting of a single layer. It computes a weighted sum of its inputs plus a bias and passes the result through a step function to produce a binary output. Despite this long history, neural networks have become popular only recently, because advances in computational speed and the availability of large datasets have made it practical to train much larger networks.
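A minimal sketch of a single perceptron, assuming the classic formulation (weighted sum plus bias, step activation) trained with the perceptron learning rule. The function names and the logical-AND dataset are hypothetical choices for illustration, not taken from the sources cited here.

```python
def step(z):
    """Step activation: output 1 if the weighted sum is non-negative, else 0."""
    return 1 if z >= 0 else 0


def predict(weights, bias, inputs):
    """Weighted sum of the inputs plus the bias, passed through the step function."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(z)


def train_perceptron(data, epochs=20, learning_rate=1.0):
    """Perceptron learning rule: shift the weights toward each misclassified target.

    With zero-initialised weights the learning rate only rescales the weights,
    so 1.0 keeps the arithmetic exact without changing the decisions.
    """
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in data:
            error = target - predict(weights, bias, inputs)  # -1, 0, or +1
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Logical AND is linearly separable, so a single perceptron can learn it.
and_data = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = train_perceptron(and_data)
print([predict(weights, bias, x) for x, _ in and_data])  # → [0, 0, 0, 1]
```

A single perceptron can only separate classes with a straight line (a hyperplane), which is one reason later networks stack many such units into layers.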

The Basic Structure of ANNs

Artificial neural networks consist of many neurons and the connections between them, an architecture loosely modeled on the human brain. Rather than replicating the brain, they use this structure to learn patterns from data, allowing us to classify data, make predictions, and more.

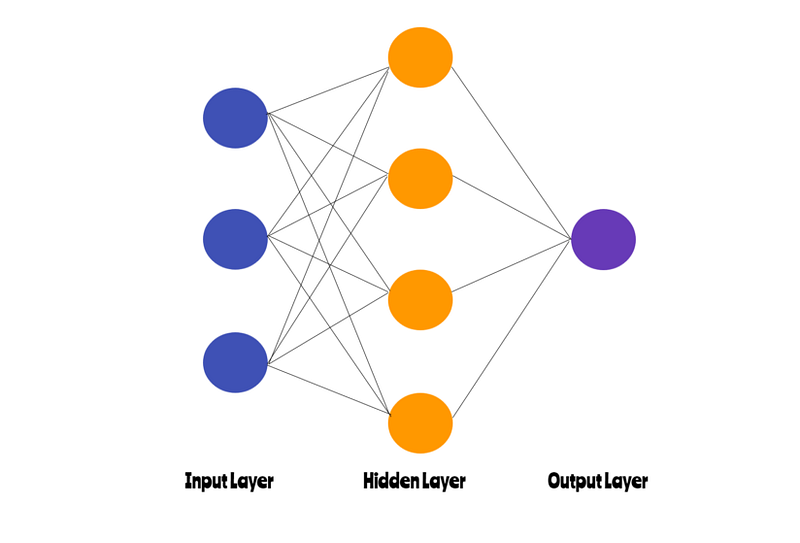

The diagram above illustrates the essential architecture of an ANN, which serves as the foundation for more complex systems. Here’s a breakdown of its components:

- Input Layer: This layer receives input data and forwards it to the next layer.

- Hidden Layer: These intermediate layers transform the inputs into internal representations; by learning these representations, the network discovers the relationships between inputs and outputs.

- Output Layer: The final layer provides predictions based on the given inputs.
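The three layers above can be sketched as a single forward pass. This is a minimal illustration assuming a fully connected network with sigmoid activations; the layer sizes (2 inputs, 3 hidden neurons, 1 output) and every weight value are hypothetical numbers chosen only to show how data flows from layer to layer.

```python
import math


def sigmoid(z):
    """Squash a weighted sum into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    """Each neuron takes a weighted sum of all inputs plus its bias, then applies sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


# Hypothetical parameters: one row of weights per neuron.
hidden_weights = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]
hidden_biases = [0.0, -0.1, 0.2]
output_weights = [[1.0, -1.5, 0.7]]
output_biases = [0.05]

x = [1.0, 0.5]                                        # input layer: raw features
h = dense_layer(x, hidden_weights, hidden_biases)     # hidden layer: 3 values
y = dense_layer(h, output_weights, output_biases)     # output layer: 1 prediction
print(y)
```

The input layer does no computation of its own here; it simply supplies `x` to the first set of weights.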

Before delving deeper, let’s clarify some common terminology:

- Neurons and Connections: Neurons are the primary units of an ANN, each receiving inputs and producing a single output sent to the next layer. Connections between neurons have associated weight values that denote their strength.

- Weights: Each weight scales the signal passing along one connection, indicating how much that input contributes to the receiving neuron's output. Optimizing weight values is central to ANN learning.

- Learning Rate: This parameter controls how large a step is taken each time the weights are updated during training.

- Activation Functions: These introduce non-linearity into the network, enabling it to model complex relationships. Without them, any stack of layers would reduce to a single linear transformation.

- Loss Function: This function assesses the error of a single training example, while the cost function averages the loss over the entire training dataset.
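To make the terminology above concrete, here is a small sketch of two common activation functions, a per-example loss, the averaged cost, and a single weight update scaled by the learning rate. Squared error is assumed as the loss purely for illustration; the function names are hypothetical, and real networks often use other losses (such as cross-entropy).

```python
import math


def sigmoid(z):
    """Activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    """Activation: passes positive values through and zeroes out negative ones."""
    return max(0.0, z)


def squared_error(prediction, target):
    """Loss: the error on a single training example."""
    return (prediction - target) ** 2


def cost(predictions, targets):
    """Cost: the loss averaged over the whole training set."""
    losses = [squared_error(p, t) for p, t in zip(predictions, targets)]
    return sum(losses) / len(losses)


def update_weight(weight, gradient, learning_rate):
    """One gradient-descent step; the learning rate scales the size of the step."""
    return weight - learning_rate * gradient


print(relu(-2.0), relu(3.0))   # → 0.0 3.0
print(sigmoid(0.0))            # → 0.5
print(cost([0.9, 0.2], [1.0, 0.0]))
print(update_weight(0.5, gradient=2.0, learning_rate=0.1))
```

A larger learning rate takes bigger steps and can overshoot a minimum; a smaller one is more stable but needs more iterations.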

How Neural Networks Learn

Let’s simplify the explanation of how ANNs operate. Initially, the network's weights are assigned randomly, leading to inaccurate predictions and high loss scores. To improve accuracy, we train the network by adjusting these weights based on input data.

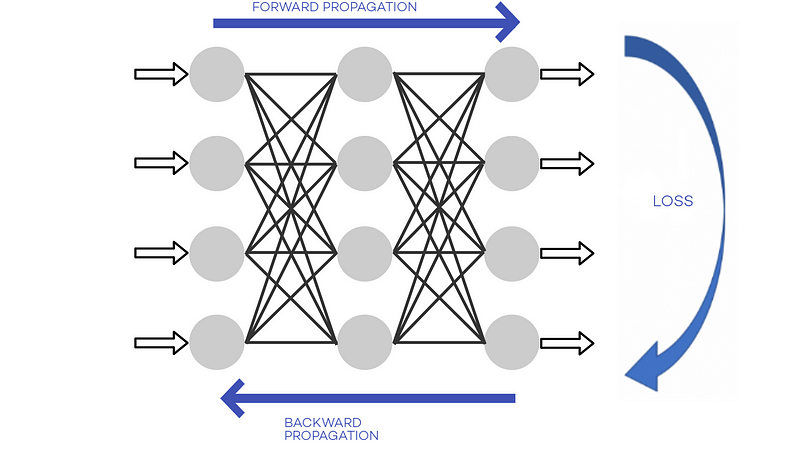

Training involves two main algorithms:

- Feedforward: This calculates the predicted output.

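- Backpropagation: This computes the gradient of the loss with respect to each weight, working backward from the output layer with the chain rule. An optimizer such as gradient descent then uses these gradients to update the weights and reduce the loss score. Backpropagation is the core algorithm for training ANNs.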
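A minimal sketch of what backpropagation computes, for the simplest possible case: one sigmoid neuron with a single weight, a bias, and a squared-error loss (all assumptions chosen for illustration). The analytic gradient from the chain rule is checked against a finite-difference estimate, and the last lines show a separate gradient-descent step using those gradients. The helper names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(w, b, x, y):
    """Feedforward for one neuron: returns the prediction and its squared-error loss."""
    p = sigmoid(w * x + b)
    return p, (p - y) ** 2


def backward(w, b, x, y):
    """Chain rule, output to input: dL/dw = dL/dp * dp/dz * dz/dw."""
    p, _ = forward(w, b, x, y)
    dL_dp = 2.0 * (p - y)        # derivative of (p - y)^2
    dp_dz = p * (1.0 - p)        # derivative of the sigmoid
    dL_dz = dL_dp * dp_dz
    return dL_dz * x, dL_dz      # (dL/dw, dL/db), since dz/dw = x and dz/db = 1


def numerical_grad_w(w, b, x, y, eps=1e-6):
    """Central finite difference, used only to check the analytic gradient."""
    _, loss_plus = forward(w + eps, b, x, y)
    _, loss_minus = forward(w - eps, b, x, y)
    return (loss_plus - loss_minus) / (2 * eps)


w, b, x, y = 0.4, -0.1, 1.5, 1.0
grad_w, grad_b = backward(w, b, x, y)
print(grad_w, numerical_grad_w(w, b, x, y))  # the two values should agree closely

# The optimizer (plain gradient descent here) then applies the gradients.
learning_rate = 0.5
w, b = w - learning_rate * grad_w, b - learning_rate * grad_b
```

In a multi-layer network the same chain rule is applied layer by layer, reusing each layer's intermediate derivative for the layer before it.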

In essence, the training loop begins with input data and random weights. The feedforward pass generates predictions, and the loss score is computed by comparing those predictions to the actual values. Backpropagation then computes the gradients of the loss, and the weights are adjusted accordingly. This cycle is repeated many times over numerous examples, gradually moving the network toward weights that produce accurate predictions.
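The training loop just described can be sketched end to end for a tiny network. This is an illustrative sketch under several assumptions not stated in the original: 2 inputs, 3 sigmoid hidden neurons, 1 sigmoid output, a binary cross-entropy loss, per-example (stochastic) gradient-descent updates, and the logical-OR function as a toy dataset. The names `train` and `feedforward` are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(data, n_hidden=3, epochs=2000, learning_rate=0.5, seed=0):
    rng = random.Random(seed)
    n_in = len(data[0][0])
    # 1. Start from small random weights.
    w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b2 = 0.0

    def feedforward(x):
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
        p = sigmoid(sum(w * hi for w, hi in zip(w2, h)) + b2)
        return h, p

    history = []
    for _ in range(epochs):
        total_loss = 0.0
        for x, y in data:
            # 2. Feedforward: compute the prediction.
            h, p = feedforward(x)
            # 3. Loss: binary cross-entropy for this example.
            total_loss += -(y * math.log(p) + (1 - y) * math.log(1 - p))
            # 4. Backpropagation: gradients via the chain rule.
            d_out = p - y  # dLoss/dz at the output for sigmoid + cross-entropy
            d_hidden = [d_out * w2[j] * h[j] * (1 - h[j]) for j in range(n_hidden)]
            # 5. Gradient descent: update every weight and bias.
            for j in range(n_hidden):
                w2[j] -= learning_rate * d_out * h[j]
                for i in range(n_in):
                    w1[j][i] -= learning_rate * d_hidden[j] * x[i]
                b1[j] -= learning_rate * d_hidden[j]
            b2 -= learning_rate * d_out
        history.append(total_loss / len(data))  # cost: average loss over the epoch
    return feedforward, history


or_data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
predict, history = train(or_data)
print(round(history[0], 3), round(history[-1], 3))  # cost falls during training
print([round(predict(x)[1]) for x, _ in or_data])
```

Libraries such as Keras or PyTorch automate steps 4 and 5, but the loop they run follows the same shape.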

Exploring Deep Learning

Deep learning, a specific branch of machine learning, utilizes multi-layer architectures in ANNs. While perceptrons represent the simplest form of a neural network, deep learning involves complex systems with multiple hidden layers. The term 'deep' refers to the number of these layers.
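A small sketch of what "deep" means structurally: the same forward-pass code works for any number of hidden layers, and depth is simply how many are stacked. The layer sizes, ReLU hidden activations, linear output, and the random-weight scaling by `1/sqrt(n_in)` are all assumptions chosen for illustration; `make_network` and `forward` are hypothetical names.

```python
import math
import random


def make_network(layer_sizes, seed=0):
    """Build random weights for a fully connected network.

    layer_sizes lists the neurons per layer, e.g. [4, 8, 8, 8, 1]:
    4 inputs, three hidden layers of 8 neurons, and 1 output.
    """
    rng = random.Random(seed)
    return [
        ([[rng.gauss(0, 1) / math.sqrt(n_in) for _ in range(n_in)] for _ in range(n_out)],
         [0.0] * n_out)
        for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
    ]


def relu(z):
    return max(0.0, z)


def forward(network, x):
    """Feed x through every layer: ReLU on hidden layers, linear output layer."""
    for k, (weights, biases) in enumerate(network):
        z = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
        is_output = k == len(network) - 1
        x = z if is_output else [relu(v) for v in z]
    return x


shallow = make_network([4, 8, 1])        # one hidden layer
deep = make_network([4, 8, 8, 8, 1])     # three hidden layers
print(len(shallow) - 1, len(deep) - 1)   # → 1 3  (hidden-layer counts)
print(forward(deep, [0.5, -1.0, 2.0, 0.0]))
```

Adding depth costs nothing in code, but each extra layer adds parameters to train, which is part of why deep learning had to wait for faster hardware and larger datasets.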

Although the concept of perceptrons originated in the 1950s, deep learning has gained significant attention only in recent years, thanks to advances in computational power and the availability of large datasets. Since the 2010s, deep learning algorithms have achieved remarkable results in perceptual tasks such as image classification, speech recognition, and the perception systems used in autonomous driving.

Applications of Deep Learning

Today, deep learning is attracting widespread interest and investment across industries, with applications spanning:

- Virtual Assistants: Voice-activated technologies like Siri, Alexa, and Google Assistant exemplify popular uses of deep learning.

- Self-Driving Cars: Companies such as Waymo and Tesla, and previously Uber, have pursued autonomous driving technology, although significant challenges remain.

- Entertainment: Platforms like Netflix and Spotify leverage deep learning to tailor recommendations for users.

- Natural Language Processing (NLP): Applications in document summarization, question answering, and text classification demonstrate deep learning's versatility.

- Healthcare: Deep learning is being applied across medicine, in processes ranging from diagnostics to drug discovery.

- News Aggregation: Algorithms help filter fake news and protect user privacy online.

- Image Recognition: This application focuses on identifying objects and people within images.

As Andrew Ng once noted, worrying about superintelligent AI today is premature, much like worrying about overpopulation on Mars; the field is still far from building such systems. While the future of AI and deep learning remains uncertain, the trajectory points toward ever deeper integration into our daily lives, much as the internet evolved.

Stephen Hawking famously said, “Success in creating AI would be the biggest event in human history. Unfortunately, it might also be the last, unless we learn how to avoid the risks.”

References:

- François Chollet, Deep Learning with Python

- Ian Goodfellow, Yoshua Bengio, and Aaron Courville, Deep Learning (Adaptive Computation and Machine Learning series), MIT Press
