The Unseen Value of Human Judgment in AI Predictions

The Human Element in AI: A Deep Dive

In recent weeks, I've observed an intriguing phenomenon. Despite our admiration for the advancements in Artificial Intelligence (AI), many of us take pleasure in witnessing its shortcomings. This tendency is not entirely surprising; humanity has held a dominant position in the natural order for so long that the notion of being outdone by machines feels foreign.

AI evokes a sense of unease reminiscent of films like The Terminator and The Matrix, and that unease makes these technologies harder to accept. The anxiety isn't limited to laypeople; even those versed in technology often share it. After all, we are human.

But what defines humanity? For some, it is the belief in an ineffable "human factor" that distinguishes us from AI and machines. Philosophers and data scientists have long debated the existence and significance of this human element. Does it truly exist?

A Match to Remember

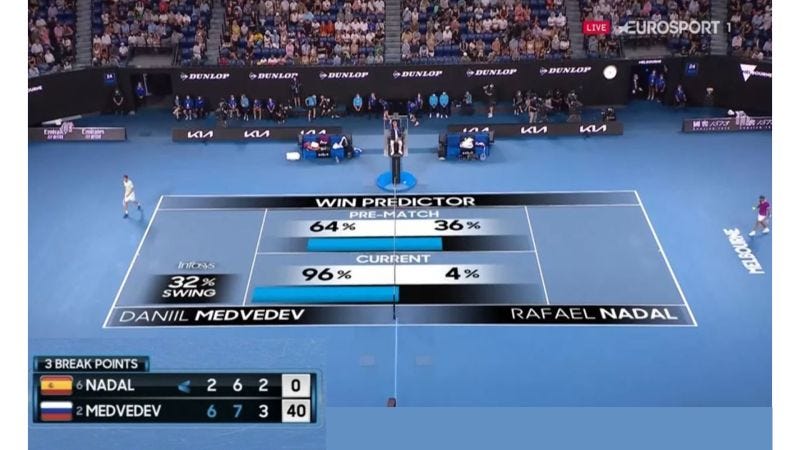

What could a tennis match possibly have to do with AI? Allow me to set the scene. Recently, Rafael Nadal claimed his second Australian Open title, a feat that made him the first male player to win 21 Grand Slam singles titles. The triumph came after he returned from a serious injury, and in the final he found himself two sets down. As his chances of victory dwindled, a graphic displaying AI predictions appeared on our screens:

Prior to the match, Nadal's probability of winning stood at 36%, but as the match progressed, it plummeted to just 4%. Yet, against all odds, Nadal emerged victorious.

For his fans, especially those in Spain, the match was an exhilarating spectacle. His resilience held viewers captive for more than five hours, culminating in an unforgettable win.

Celebrating Human Spirit

Once the match ended, social media erupted with congratulations. Nadal's victory not only cemented his legendary status but also came to symbolize the triumph of human determination over AI predictions. Many tweets, including some from people working in tech and data science, celebrated the human spirit.

The prevailing sentiment? While AI predictions can be entertaining, proving them wrong is a cause for celebration.

Investigating AI Predictions

In response to the discussions online, some technology professionals pondered the potential flaws in the AI model that generated those predictions. Did it overlook crucial data? Was Nadal's injury overemphasized? Perhaps the algorithm failed to account for Nadal's impressive history of comebacks.

I can't confirm what data the model used, but the algorithm's apparent inaccuracy reignited a long-running debate: is there a genuine human factor at play?

Research from MIT Sloan reveals that individuals can make vastly different decisions based on identical AI inputs. Sometimes these choices surpass AI predictions, while other times they lead to distinctly human errors.

So, how do we ascertain whether incorporating a human factor into our algorithms will enhance their performance? And how do we effectively integrate this element? Currently, definitive answers remain elusive. Until we find them, a prudent approach may be to merge AI predictions with human oversight.
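One common way to merge AI predictions with human oversight is to let a model act on its own only when it is confident and to route everything else to a person. The sketch below is a minimal illustration of that idea under stated assumptions, not the method of any system mentioned in this article; the `combine` function, the `Decision` type, and the 0.9 confidence threshold are hypothetical choices made for the example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Decision:
    label: str       # the final outcome
    decided_by: str  # "model" or "human"


def combine(
    model_label: str,
    confidence: float,
    human_review: Callable[[str, float], str],
    threshold: float = 0.9,  # hypothetical cut-off; would need tuning per application
) -> Decision:
    """Accept confident model predictions; send the rest to a human reviewer."""
    if confidence >= threshold:
        # The model is confident enough to act on its own.
        return Decision(model_label, "model")
    # Low confidence: the reviewer sees the model's suggestion and its
    # confidence, but makes the final call.
    return Decision(human_review(model_label, confidence), "human")


if __name__ == "__main__":
    # A hypothetical reviewer who, using context the model lacks,
    # overrides a low-confidence "approve".
    def reviewer(suggestion: str, confidence: float) -> str:
        return "reject" if suggestion == "approve" else suggestion

    print(combine("approve", 0.95, reviewer))  # decided by the model
    print(combine("approve", 0.60, reviewer))  # decided by the human
```

The threshold is the main design lever: lowering it sends fewer cases to people, raising it sends more. Where to set it is itself a human judgment.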

Collaborative Decision-Making

I recently encountered an insightful interview with Jeff McMillan, Chief Analytics and Data Officer at Morgan Stanley Wealth Management, who advocates for a close partnership between humans and algorithms. He believes that Machine Learning (ML) should augment rather than replace human intelligence.

As Patrick Gray aptly notes in TechRepublic, “If an intelligent machine predicts heavy rain, most people will likely grab an umbrella.” The prediction doesn't guarantee rain, nor does it mean that everyone needs an umbrella. What matters is that people weigh the AI's prediction against their own judgment before acting on it.

Consider the issue of bias: we certainly don’t want our algorithms to discriminate. Addressing bias is complex, and human intervention is crucial to keep AI from learning and perpetuating it.
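Humans can't correct a bias they never see, so one practical form of intervention is to audit a model's outputs and hand suspicious patterns to a person. The sketch below compares approval rates across groups and flags any group whose rate falls below 80% of the highest group's rate, a heuristic borrowed from the "four-fifths rule" in US employment-selection guidelines. The function names, the `(group, approved)` input format, and the threshold are assumptions for illustration; the check only flags disparities for human investigation and does not remove bias by itself.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def selection_rates(predictions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of positive (approved) predictions per group.

    `predictions` uses a hypothetical format: (group, approved) pairs.
    """
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for group, ok in predictions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def flag_for_review(
    predictions: Iterable[Tuple[str, bool]], min_ratio: float = 0.8
) -> List[str]:
    """Groups whose approval rate is below `min_ratio` times the highest rate."""
    rates = selection_rates(predictions)
    highest = max(rates.values(), default=0.0)
    if highest == 0:
        return []  # nobody was approved, so there is no ratio to compare
    return sorted(g for g, r in rates.items() if r / highest < min_ratio)


if __name__ == "__main__":
    preds = ([("A", True)] * 8 + [("A", False)] * 2
             + [("B", True)] * 5 + [("B", False)] * 5)
    print(selection_rates(preds))  # {'A': 0.8, 'B': 0.5}
    print(flag_for_review(preds))  # ['B'] because 0.5 / 0.8 = 0.625 < 0.8
```

A flag is a prompt for a person to ask why, not a verdict: the gap might reflect bias learned from historical data or a legitimate difference, and telling those apart is the kind of judgment that still needs a human.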

A Practical Example

This collaborative approach is already visible in modern laboratories, where scientists use algorithms to make their experiments more efficient and more likely to succeed.

Looking Ahead

The future of AI remains uncertain. Nevertheless, deploying algorithms in real-world scenarios without human oversight poses significant risks, particularly with intricate models that few can fully comprehend.

The apprehensions surrounding AI are understandable; many perceive it as an incomprehensible threat, and that perception is likely to persist for some time. Data scientists must take the lead in ensuring that companies do not adopt AI hastily.

For the moment, developing algorithms and processes that bolster human decision-making appears to be the most prudent strategy—one that promises to advance human progress in a responsible and sustainable manner.
